Human-centered Physical Intelligence (HPI) Lab

The HPI Lab is part of the Department of Artificial Intelligence at Hanyang University ERICA.

Our mission is to develop human-centered physical AI agents that can perceive, act, and interact in the real world, enabling intelligent systems to provide safe and beneficial services to humans and society.

To achieve this, our research advances 3D environment perception, robotic action learning, and human–AI–robot interaction, with the goal of building physical AI agents that are adaptive, robust, and socially acceptable in real-world environments such as autonomous driving, manufacturing, logistics, healthcare, and households.

Our research topics include, but are not limited to:

news

| Jan 31, 2026 | A paper about “A Dynamic Action Model-Based Vision-Language-Action Framework for Robot Manipulation” is accepted to ICRA 2026. |

|---|---|

| Sep 19, 2025 | A paper about “Active Test-time Vision-Language Navigation” is accepted to NeurIPS 2025. |

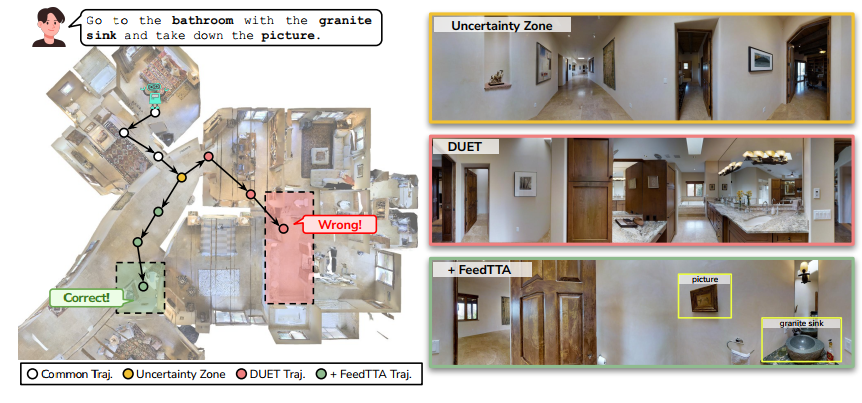

| May 02, 2025 | A paper about “Test-Time Adaptation for Online Vision-Language Navigation” is accepted to ICML 2025. |

| Feb 27, 2025 | A paper about “Efficient 3D Occupancy Prediction” is accepted to CVPR 2025. |

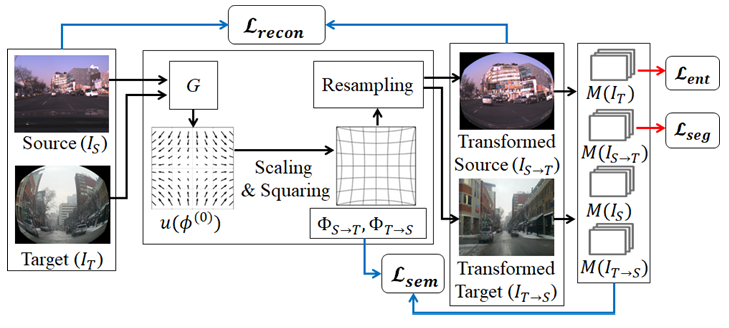

| Sep 26, 2024 | Two papers about “Unified Domain Generalization & Adaptation” and “Online HD-Map Construction” are accepted to NeurIPS 2024. |

| Dec 09, 2023 | A paper about “Cross-Modal and Domain Adversarial Adaptation” is accepted to AAAI 2024. |

| Sep 22, 2023 | A paper about “Structural and Temporal Cross-Modal Distillation” is accepted to NeurIPS 2023. |

| Sep 16, 2022 | A paper about “Modeling Cumulative Arm Fatigue in Mid-Air Interaction” is accepted to International Journal of Human-Computer Studies (IJHCS). |